Principal components analysis

July 12, 2021 6 min read

Principal components analysis is a ubiquitous method of dimensionality reduction, used in various fields from finance to genomics. In this post I'm going to consider PCA from different standpoints, resulting in various perspectives on it.

Principal components analysis was discovered multiple times throughout the history. The most relevant of them are Pearson’s use of it in 1901 and, later, development by Hotelling in the 1930s, who coined the name and popularized the method as a generic tool.

Optimization problem perspective

Identify axes, which preserve the most information on original data

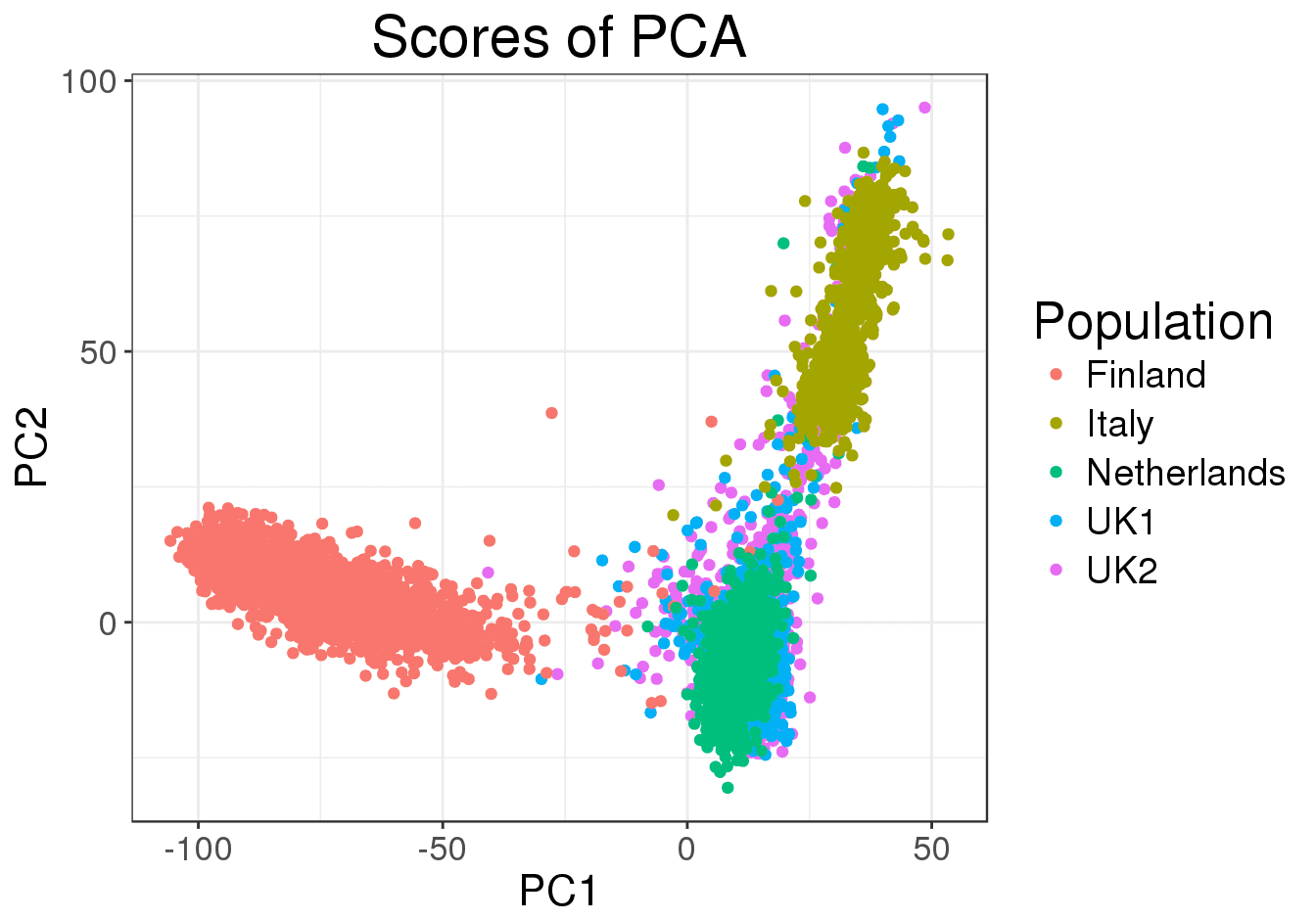

Suppose that we’ve obtained -dimensional data points. For instance, let be the number of sequenced Russian citizen, and polymorphic single-nucleotide polymorphisms that characterize each Russian citizen. We want to clusterize the Russian population into sub-populations and visually inspect the results, by projecting each person from the initial 300,000-dimensional space onto a lower-dimensional space (2D or 3D).

For our 2D/3D representation, we want to select such axes, that preserve the most information about a person. To say it more formally, if we had one point, we want the distance from the real point to its projection onto our 2D/3D space to be minimal. Now that we have n points, we want the sum of squared distances from each of those points to its projection to be minimal.

E.g. if we chose to project the data onto a 2D-space, we are looking for such axes and , that sum of square distances from points to their projections were minimal:

Minimal sum of square distance to the axes maximum explained variance

Thus, by choosing such, that they minimize the distances from the points to their projections, we simultaneously maximize the lengths of the projections themselves.

Iterative optimization process

Observation: turns out, you can use a greedy algorithm to perform the required optimization.

Indeed, if the dimensionality of space, that we are going to project the data to, is , we can always choose an orthogonal basis in that m-dimensional subspace that we are looking for.

Thus, we can first find one axis , such that:

Then we should find , which is supposed to be orthogonal to so that:

, and is orthogonal to .

Next basis vector should be orthogonal to both and , and so on. Luckily, as we’ll see in the next chapter, PCA automatically makes each next axis orthogonal to the previous ones.

Reformulation as an eigenvectors/eigenvalues problem

Alright, how do we find a single vector, such that projection onto it is maximal? Pretty simple, if the axis vector is , the length of projection of data point onto it is .

We are looking to maximize the sum of squares of lengths of the projections over all the data points:

, where is a sample covariance matrix.

By the way, the quantity is called Rayleigh quotient, as the covariance matrix is symmetric.

, thus, , where .

Elements of vector, , are coefficients for i-th eigenvector; eigenvectors are orthogonal, because the covariance matrix is symmetric, thus .

Thus, .

If one of the eigenvalues, say, , is larger than the others, as it often happens, it is called main eigenvalue.

The Rayleigh quotient then will take its maximal value, if .

Indeed, let , then we maximize the sum for non-negative , given constraint . Obviously, we reach maximum, if we maximize , where is the largest eigenvalue.

Classical statistics perspective

From multivariate normal distribution perspective.

TODO

SVD perspective

As was mentioned previously, PCA works with covariance matrix , where is our data vectors.

From the technical perspective, this matrix is as a special case of well-known in mathematics Gram matrix.

Gram matrices are positive semi-definite and symmetric. Moreover, usually they are non-full rank, if is x matrix, where , which means that although has dimensionality , its rank is only and it has only non-zero eigenvalues.

Hence, a convenient technical way to find the eigenvalues of covariance matrix is Singular Value Decomposition:

, where and are orthogonal and matrices, and is a diagonal matrix of singular values (squares of singular values are eigenvalues of ), where only elements are non-zero.

For more details on SVD, read this post. For more details on properties of Gram matrix - read this one.

Stochastic processes and signal processing perspective

From the standpoint of Karhunen-Loeve theorem, PCA is similar to a Fourier series in digital signal processing - essentially, a way to perform a lossy compression.

We can interpret PCA as a decomposition of the input data matrix into an ordered series of matrices of rank 1, created by outer products of principal components. Let us re-write the SVD transform as a series of outer products:

, where are eigenvalues, are column-vectors of matrix and are row-vectors of matrix , so that is an outer-product, resulting in a matrix of rank 1.

Each term of this sum contributes more to the resulting data matrix, the larger is the eigenvalue in it. As in the case of Fourier transform, if we aim to compress the data, we can truncate the series early, when we believe that enough information is already contained in the first terms of the series. This approach is often used with SVD for data compression - e.g. eigenfaces approach, where whole datasets of human photos are compressed by early truncation of SVD series.

Bayesian optimization perspective

TODO: Vetrov’s lecture on VAE

References

Written by Boris Burkov who lives in Moscow, Russia, loves to take part in development of cutting-edge technologies, reflects on how the world works and admires the giants of the past. You can follow me in Telegram