Condition numbers

August 23, 2021

The notion of condition numbers arises when you study the numerical stability of solutions of systems of ordinary linear equations (OLES). This concept is important in practical applications such as least-squares fitting in regression problems or computing a matrix inverse (e.g. the inverse of a covariance matrix in machine learning applications such as Gaussian processes). Another example of their use is the time complexity of quantum algorithms for solving OLES: the complexity of those algorithms is usually a polynomial or (poly)logarithmic function of the condition number. This post gives a brief review of condition numbers.

Condition numbers are actually a more general concept that applies not only to matrices but to arbitrary functions. Moreover, their definition may vary depending on the kind of problem you are solving (e.g. solving an OLES or inverting a matrix) and the kind of error (absolute or relative) you are measuring.

Informal explanation of the nature of condition numbers

The nature of condition numbers in the case of the matrix inversion problem is quite simple: if one of the eigenvalues of your matrix is 0, the matrix determinant is 0, and the matrix has no inverse. Such a matrix is called singular or degenerate.

If none of the eigenvalues is exactly 0, but one of them is close to 0, the matrix is nearly singular. It then turns out that the error you get when numerically computing the inverse of such a matrix is huge. Such a matrix is called ill-conditioned.
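A small NumPy sketch can make this concrete. It is my own illustration, not taken from the post: it inverts Hilbert matrices $H_{ij} = 1/(i+j-1)$, a classic family of ill-conditioned matrices, and compares `np.linalg.inv` against their known closed-form integer inverse. The helper names `hilbert`, `exact_hilbert_inverse` and `relative_inverse_error` are hypothetical.

```python
import math

import numpy as np


def hilbert(n):
    """Hilbert matrix H[i, j] = 1 / (i + j - 1) with 1-based indices."""
    i = np.arange(1, n + 1)
    return 1.0 / (i[:, None] + i[None, :] - 1)


def exact_hilbert_inverse(n):
    """Closed-form inverse of the Hilbert matrix (all entries are integers)."""
    inv = np.empty((n, n))
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            entry = ((-1) ** (i + j) * (i + j - 1)
                     * math.comb(n + i - 1, n - j)
                     * math.comb(n + j - 1, n - i)
                     * math.comb(i + j - 2, i - 1) ** 2)
            inv[i - 1, j - 1] = float(entry)  # exact Python int, rounded once to float
    return inv


def relative_inverse_error(n):
    """Relative error (Frobenius norm) of np.linalg.inv versus the exact inverse."""
    exact = exact_hilbert_inverse(n)
    computed = np.linalg.inv(hilbert(n))
    return np.linalg.norm(computed - exact) / np.linalg.norm(exact)


for n in (4, 8, 12):
    print(n, np.linalg.cond(hilbert(n)), relative_inverse_error(n))
```

The relative error grows by many orders of magnitude between $n = 4$ and $n = 12$, together with the condition number; the exact figures depend on the platform's LAPACK build.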

However, it is not the absolute value of the smallest eigenvalue that determines whether the matrix is well- or ill-conditioned, but (speaking loosely) the ratio between the absolute values of the largest and the smallest ones. I’ll explain this below.
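A quick NumPy check of this claim (my own example, with illustrative matrix names): scaling a matrix by a tiny factor makes its smallest eigenvalue and its determinant tiny but leaves `np.linalg.cond` (the 2-norm condition number, computed from singular values) unchanged. A matrix with an equally tiny smallest eigenvalue but a large spread between eigenvalues, on the other hand, is ill-conditioned.

```python
import numpy as np

# All eigenvalues are tiny (1e-10), but they are all equal.
tiny_but_well_conditioned = 1e-10 * np.eye(3)
# Eigenvalues 1, 1 and 1e-10: the smallest is equally tiny, but the ratio is huge.
ill_conditioned = np.diag([1.0, 1.0, 1e-10])

for name, A in [("tiny_but_well_conditioned", tiny_but_well_conditioned),
                ("ill_conditioned", ill_conditioned)]:
    eigenvalues = np.abs(np.linalg.eigvals(A))
    print(name,
          "det =", np.linalg.det(A),
          "min |eigenvalue| =", eigenvalues.min(),
          "cond =", np.linalg.cond(A))
```

Note that the determinant of the well-conditioned matrix ($10^{-30}$) is even smaller than that of the ill-conditioned one ($10^{-10}$), so the determinant alone is a poor indicator of conditioning.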

Condition numbers for the stability of OLES solutions

Let us formally define what a condition number is. We’ll need a few definitions.

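First, let us define a vector norm as a generalization of the notion of vector length that is positive (non-negative, and zero only for the zero vector), sub-additive (satisfies the triangle inequality $\|u + v\| \le \|u\| + \|v\|$) and absolutely homogeneous with respect to multiplication by a scalar ($\|\alpha u\| = |\alpha|\,\|u\|$). For now, let’s think of it as just the Euclidean vector length: $\|x\| = \sqrt{\sum_i x_i^2}$.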

The matrix norm induced by a vector norm (a special case of the more general operator norm) is the largest factor by which multiplying a vector by the matrix can increase the vector’s length:

$$\|A\| = \max_{x} \frac{\|Ax\|}{\|x\|},$$

where $x \neq 0$, obviously.
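To see this definition at work, here is a minimal NumPy sketch (a random matrix of my own choosing, Euclidean vector norm assumed): it samples many random directions, checks that no stretch factor $\|Ax\|/\|x\|$ exceeds `np.linalg.norm(A, 2)`, and shows that the maximum is attained at the first right singular vector of $A$.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 4))

# Stretch factor ||Ax|| / ||x|| for 10,000 random directions x (one per column).
xs = rng.standard_normal((4, 10_000))
stretch = np.linalg.norm(A @ xs, axis=0) / np.linalg.norm(xs, axis=0)

# With the Euclidean vector norm, NumPy computes the induced matrix norm via ord=2.
op_norm = np.linalg.norm(A, 2)

# The maximum stretch is attained at the first right singular vector of A.
_, singular_values, vt = np.linalg.svd(A)
best_x = vt[0]
best_stretch = np.linalg.norm(A @ best_x) / np.linalg.norm(best_x)

print(stretch.max(), best_stretch, op_norm)
```

Random sampling only approaches the maximum from below, which is why an exact value is computed from the singular value decomposition instead.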

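For studying the numerical computation of a matrix inverse, it is useful to consider the opposite quantity, the strongest shrinkage one can achieve by multiplying a vector by the matrix:

$$\min_{x \neq 0} \frac{\|Ax\|}{\|x\|}$$

For an invertible matrix, it is the reciprocal of the norm of the matrix inverse (substitute $x = A^{-1}z$):

$$\min_{x \neq 0} \frac{\|Ax\|}{\|x\|} = \frac{1}{\|A^{-1}\|}$$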

The ratio of the largest stretch of a vector by the matrix $A$ to its strongest shrinkage is called the condition number:

$$\kappa(A) = \frac{\max_{x \neq 0} \|Ax\| / \|x\|}{\min_{x \neq 0} \|Ax\| / \|x\|} = \|A\|\,\|A^{-1}\|$$
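For the Euclidean norm, the largest stretch and the strongest shrinkage are the largest and smallest singular values of $A$, which NumPy exposes as `ord=2` and `ord=-2` of `np.linalg.norm`. A minimal sketch (random matrix chosen for illustration) checking both identities above against `np.linalg.cond`:

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((5, 5))

max_stretch = np.linalg.norm(A, 2)    # max ||Ax|| / ||x||: largest singular value
min_stretch = np.linalg.norm(A, -2)   # min ||Ax|| / ||x||: smallest singular value
inverse_norm = np.linalg.norm(np.linalg.inv(A), 2)

kappa = max_stretch / min_stretch
print(kappa, np.linalg.cond(A))
```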

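Why does this notion make sense? Consider a system of linear equations $Ax = y$. Suppose that you make an error $\delta y$ in the estimation of $y$, which results in an error $\delta x$ of the solution:

$$A(x + \delta x) = y + \delta y$$

Hence, by subtracting $Ax = y$, we get $A\,\delta x = \delta y$, or $\delta x = A^{-1}\delta y$, so that $\|\delta x\| \le \|A^{-1}\|\,\|\delta y\|$. We now know the bound on the absolute error of $x$.

The bound on the relative error follows from multiplying two inequalities, $\|\delta x\| \le \|A^{-1}\|\,\|\delta y\|$ and $\|y\| = \|Ax\| \le \|A\|\,\|x\|$:

$$\|\delta x\|\,\|y\| \le \|A\|\,\|A^{-1}\|\,\|x\|\,\|\delta y\|, \quad \text{i.e.} \quad \frac{\|\delta x\|}{\|x\|} \le \|A\|\,\|A^{-1}\|\,\frac{\|\delta y\|}{\|y\|},$$

where the factor $\|A\|\,\|A^{-1}\|$ is exactly the condition number.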

So, the condition number bounds how strongly the relative error of the input $y$ can be amplified in the relative error of the numerical solution $x$.
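The bound can be checked numerically. In this sketch (random matrix and perturbation size are my own choices; `amplification` is a hypothetical helper) a random error in $y$ stays within the bound, while choosing $x$ along the top right singular vector and $\delta y$ along the bottom left singular vector makes the amplification equal to the condition number, so the bound is tight.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 6
A = rng.standard_normal((n, n))
kappa = np.linalg.cond(A)


def amplification(x, dx, y, dy):
    """Relative error of x divided by relative error of y."""
    return (np.linalg.norm(dx) / np.linalg.norm(x)) / (np.linalg.norm(dy) / np.linalg.norm(y))


# A random right-hand side with a small random error.
x = rng.standard_normal(n)
y = A @ x
dy = 1e-8 * rng.standard_normal(n)
dx = np.linalg.solve(A, dy)          # by linearity, A (x + dx) = y + dy
random_case = amplification(x, dx, y, dy)

# Worst case: x along the top right singular vector, dy along the bottom left one.
U, s, Vt = np.linalg.svd(A)
x_w = Vt[0]
y_w = A @ x_w                        # equals s[0] * U[:, 0]
dy_w = 1e-8 * U[:, -1]
dx_w = np.linalg.solve(A, dy_w)      # equals (1e-8 / s[-1]) * Vt[-1]
worst_case = amplification(x_w, dx_w, y_w, dy_w)

print(random_case, worst_case, kappa)
```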

Matrix norms: operator norm vs spectral norm vs entrywise norm vs Frobenius norm

Before moving on, let us briefly stop to clarify the notion of matrix norms.

There are several approaches to defining matrix norms; they extend the notion of vector norm in different ways.

The first approach, which we used above, applies the generic operator norm to matrices. It says by what factor, at most, the norm (length) of the vector $Ax$ can be larger than the norm (length) of the vector $x$.

An important special case of the operator norm is the spectral norm, in which the vector norm used is the Euclidean ($L_2$) norm, i.e. the length.

Don’t confuse “spectral norm” with “spectral radius”: they can be different in the general case (see Gelfand’s formula). I won’t go deeper into the topic of singular values here, but will just mention that the spectral norm of a matrix $A$ equals its largest singular value, which is the square root of the largest eigenvalue of the matrix $A^T A$ ($A^* A$ for complex matrices). The spectral radius, in contrast, is just the largest absolute value among the eigenvalues of $A$. For normal matrices, e.g. orthogonal/unitary and symmetric/Hermitian ones, the singular values coincide with the absolute values of the eigenvalues, so the two quantities are equal.
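A short NumPy sketch of the difference (example matrices are my own; `spectral_norm` and `spectral_radius` are hypothetical helper names): a nilpotent matrix has spectral radius 0 but spectral norm 1, a non-normal triangular matrix has a spectral norm far above its spectral radius, while for a symmetric matrix the two agree. The last lines estimate the spectral radius with Gelfand’s formula $\rho(A) = \lim_{k \to \infty} \|A^k\|^{1/k}$.

```python
import numpy as np


def spectral_norm(A):
    """Largest singular value of A."""
    return np.linalg.norm(A, 2)


def spectral_radius(A):
    """Largest absolute value among the eigenvalues of A."""
    return np.max(np.abs(np.linalg.eigvals(A)))


nilpotent = np.array([[0.0, 1.0], [0.0, 0.0]])    # eigenvalues 0, 0
triangular = np.array([[1.0, 10.0], [0.0, 2.0]])  # eigenvalues 1, 2; not normal
symmetric = np.array([[2.0, 1.0], [1.0, 3.0]])    # normal matrix

for name, A in [("nilpotent", nilpotent), ("triangular", triangular), ("symmetric", symmetric)]:
    print(name, spectral_norm(A), spectral_radius(A))

# Gelfand's formula: spectral_radius(A) = lim_{k -> inf} ||A^k||^(1/k).
k = 200
gelfand_estimate = spectral_norm(np.linalg.matrix_power(triangular, k)) ** (1.0 / k)
print(gelfand_estimate)
```

The convergence in Gelfand’s formula is slow: even with $k = 200$ the estimate is only within a few percent of the spectral radius 2.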

The second approach treats the matrix as a mere vector, flattening its two dimensions into one, and applies a vector norm to it directly, as though it were just a vector. These norms are sometimes called “entrywise”.

Again, an important special case of this kind of norm is the Frobenius norm, which applies the regular Euclidean vector norm to the matrix entries: $\|A\|_F = \sqrt{\sum_{i,j} a_{ij}^2}$.
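A small NumPy sketch (example matrix of my own) showing that the Frobenius norm is just the Euclidean norm of the flattened matrix, that it also equals the square root of the sum of squared singular values and therefore is at least the spectral norm, and one pitfall: for a 2-D array `np.linalg.norm(A, 1)` is the operator 1-norm (maximum absolute column sum), not the entrywise $L_1$ norm.

```python
import numpy as np

A = np.array([[1.0, 2.0], [3.0, 4.0]])

frobenius = np.linalg.norm(A, "fro")
flattened_l2 = np.linalg.norm(A.ravel())      # Euclidean norm of the flattened matrix
singular_values = np.linalg.svd(A, compute_uv=False)

# Caution: for 2-D input, ord=1 in np.linalg.norm is the induced (operator) 1-norm,
# i.e. the maximum absolute column sum, not the entrywise L1 norm.
operator_1 = np.linalg.norm(A, 1)
entrywise_1 = np.abs(A).sum()

print(frobenius, flattened_l2, np.sqrt(np.sum(singular_values ** 2)))
print(operator_1, entrywise_1)   # 6.0 10.0
```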

Matrix inverse

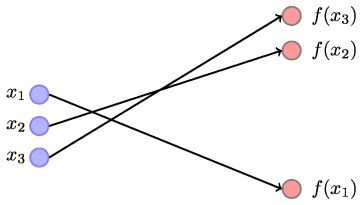

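When you solve the problem of matrix inversion, $AX = I$ with $X = A^{-1}$, each column $x_i$ of the inverse matrix is a solution of the linear system $A x_i = e_i$, where $e_i$ is the $i$-th column of the identity matrix, a one-hot vector with all coordinates equal to 0 except one, which equals 1: $e_i = (0, \dots, 0, 1, 0, \dots, 0)^T$.

Hence, for each column of the matrix $X$, the relative error of its computation is, again, bounded via the condition number. For each column $x_i$ we can write:

$$\frac{\|\delta x_i\|}{\|x_i\|} \le \|A\|\,\|A^{-1}\|\,\frac{\|\delta e_i\|}{\|e_i\|}$$

If we assume that the errors in all the columns have the same magnitude, $\|\delta e_i\| = \varepsilon$ (recall that $\|e_i\| = 1$), squaring and summing these column bounds lets us express the error of the inverse matrix in the Frobenius norm, rather than the operator/spectral norm, directly through the condition number:

$$\frac{\|\delta X\|_F}{\|X\|_F} \le \|A\|\,\|A^{-1}\|\,\varepsilon$$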
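A minimal NumPy sketch of the column-by-column view of inversion and of the Frobenius bound, assuming (as above) that every right-hand side $e_i$ is perturbed by a vector of the same length $\varepsilon$. The random matrix, seed and `eps` value are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 5
A = rng.standard_normal((n, n))
kappa = np.linalg.cond(A)
identity = np.eye(n)

# Column i of the inverse solves A x_i = e_i.
X = np.column_stack([np.linalg.solve(A, identity[:, i]) for i in range(n)])

# Perturb every e_i by a random vector of the same length eps.
eps = 1e-8
E = rng.standard_normal((n, n))
E *= eps / np.linalg.norm(E, axis=0)   # rescale each column to norm eps
dX = np.linalg.solve(A, E)             # error of the inverse: A^{-1} E

relative_error = np.linalg.norm(dX, "fro") / np.linalg.norm(X, "fro")
print(relative_error, kappa * eps)
```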

L2 regularization from the condition number perspective

In regression problems, L2 regularization is often introduced to prevent the regression weights from exploding.

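Here is how it works. In regression problems, we look for the weights vector $w$ that minimizes the sum of squares over the $N$ training pairs $(x_i, y_i)$:

$$\min_w \sum_{i=1}^{N} (y_i - w^T x_i)^2$$

In order to prevent the solution from overfitting, it is common practice to add an L2-norm Tikhonov regularization term with a coefficient $\lambda > 0$:

$$\min_w \sum_{i=1}^{N} (y_i - w^T x_i)^2 + \lambda \|w\|^2$$

Why does this work? Upon minimization, we take the derivative with respect to each coordinate of $w$, set it to zero and end up with the following expression:

$$\left(\sum_{i=1}^{N} x_i x_i^T + \lambda I\right) w = \sum_{i=1}^{N} y_i x_i$$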
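The normal equations can be sanity-checked with a small NumPy sketch (synthetic data and the value of `lam` are my own choices; `X` stacks the vectors $x_i^T$ as rows). It solves the regularized normal equations directly and compares the result with ordinary least squares on an equivalent augmented system, a standard reformulation of ridge regression that is not from the original post.

```python
import numpy as np

rng = np.random.default_rng(4)
N, d = 5, 8                           # fewer samples than features
X = rng.standard_normal((N, d))       # row i is x_i^T
y = rng.standard_normal(N)
lam = 0.1                             # regularization coefficient lambda

# Normal equations: (sum_i x_i x_i^T + lambda I) w = sum_i y_i x_i
B = X.T @ X
w_normal = np.linalg.solve(B + lam * np.eye(d), X.T @ y)

# Same objective written as ordinary least squares on an augmented system:
# ||y - Xw||^2 + lambda ||w||^2 = ||[y; 0] - [X; sqrt(lambda) I] w||^2
X_aug = np.vstack([X, np.sqrt(lam) * np.eye(d)])
y_aug = np.concatenate([y, np.zeros(d)])
w_lstsq, *_ = np.linalg.lstsq(X_aug, y_aug, rcond=None)

# The gradient of the regularized objective vanishes at the solution.
gradient = -2 * X.T @ (y - X @ w_normal) + 2 * lam * w_normal
print(np.abs(w_normal - w_lstsq).max(), np.abs(gradient).max())
```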

Now, without regularization we would need to invert the matrix $B = \sum_{i=1}^{N} x_i x_i^T$. If it is ill-conditioned, or even singular (i.e. has zero eigenvalues, which is guaranteed when the number of terms $N$ in the sum is less than the dimensionality of the vectors $x_i$, because then $B$ is not full-rank), its inverse is either computed with a huge error (if its smallest eigenvalue in absolute value is very small) or does not exist at all (if that eigenvalue is 0).

Regularization is our solution to this problem, because it increases all the eigenvalues by the regularization coefficient $\lambda$. Indeed, if $\mu$ is an eigenvalue of $B$ and $v$ is its eigenvector:

$$B v = \mu v$$

then $v$ is still an eigenvector of the regularized matrix $C = B + \lambda I$:

$$C v = B v + \lambda v = (\mu + \lambda) v$$

All the eigenvalues of $B$ are non-negative, because it is a Gram matrix, which is symmetric positive semi-definite: $v^T B v = \sum_i (x_i^T v)^2 \ge 0$. Thus the smallest eigenvalue of $C$ is at least $\lambda$, and, since $C$ is symmetric, its condition number is bounded:

$$\kappa(C) = \frac{\mu_{\max} + \lambda}{\mu_{\min} + \lambda} \le \frac{\mu_{\max} + \lambda}{\lambda},$$

which becomes moderate when $\lambda$ is big enough.
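A minimal NumPy sketch of this eigenvalue shift, on synthetic data of my own choosing with fewer samples than features, so that $B$ is singular: every eigenvalue of $C$ equals the corresponding eigenvalue of $B$ plus $\lambda$, and the condition number of $C$ stays below $(\mu_{\max} + \lambda)/\lambda$ while that of $B$ is astronomically large (or infinite).

```python
import numpy as np

rng = np.random.default_rng(5)
N, d = 5, 8                        # N < d, so B has rank at most N
X = rng.standard_normal((N, d))    # row i is x_i^T
B = X.T @ X                        # sum_i x_i x_i^T, symmetric positive semi-definite
lam = 0.1
C = B + lam * np.eye(d)            # regularized matrix

mu = np.linalg.eigvalsh(B)          # eigenvalues of B in ascending order
mu_reg = np.linalg.eigvalsh(C)      # eigenvalues of C in ascending order

print("smallest eigenvalues of B:", mu[:3])
print("cond(B) =", np.linalg.cond(B))
print("cond(C) =", np.linalg.cond(C), "bound:", (mu.max() + lam) / lam)
```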

References

- https://www.phys.uconn.edu/~rozman/Courses/m3511_18s/downloads/condnumber.pdf

- https://en.wikipedia.org/wiki/Spectral_radius - spectral radius is NOT matrix/operator norm in general case

- https://en.wikipedia.org/wiki/Condition_number

- https://www.sjsu.edu/faculty/guangliang.chen/Math253S20/lec7matrixnorm.pdf - matrix norms, low-rank approximations etc.

- https://www.scribd.com/document/501501948/Error-Analysis-of-Direct-Methods-of-Matrix-Inversion

- https://web2.qatar.cmu.edu/~gdicaro/10315-Fall19/additional/welling-notes-on-kernel-ridge.pdf - Max Welling's lecture notes on kernel ridge regression (KRR) mention how regularization works

- https://www.quora.com/Are-the-eigenvalues-of-a-matrix-unchanged-if-a-constant-is-added-to-each-diagonal-element - addition of regularization increases the eigenvalues

Written by Boris Burkov who lives in Moscow, Russia, loves to take part in the development of cutting-edge technologies, reflects on how the world works and admires the giants of the past. You can follow me on Telegram