Quadratic programming

December 06, 2021 5 min read

Solution of quadratic programming problem in polynomial time by Soviet mathematicians in the late 1970s - early 1980s paved the way for several important machine learning methods of 1990s, such as Lasso/ElasticNet regression (with its L1 regularization) and Support Vector Machines (SVM). These methods are incredibly useful, because they produce sparse solutions, effectively serving as a proxy for L0 regularization, in feature space/data point space respectively. Thanks to QP magic, they manage to do this in polynomial time, while straightforward application of L0 norm is NP-hard and cannot be done efficiently.

Problem statement

General case of quadratic programming problem is stated as follows.

Suppose, that you have a vector of multiple variables . given by a quadratic form + a linear bias

You have a function of those variables, which is a sum of quadratic term and linear term .

Here quadratic term is given by a quadratic form .

The linear term is given by a dot product of with bias weights vector: .

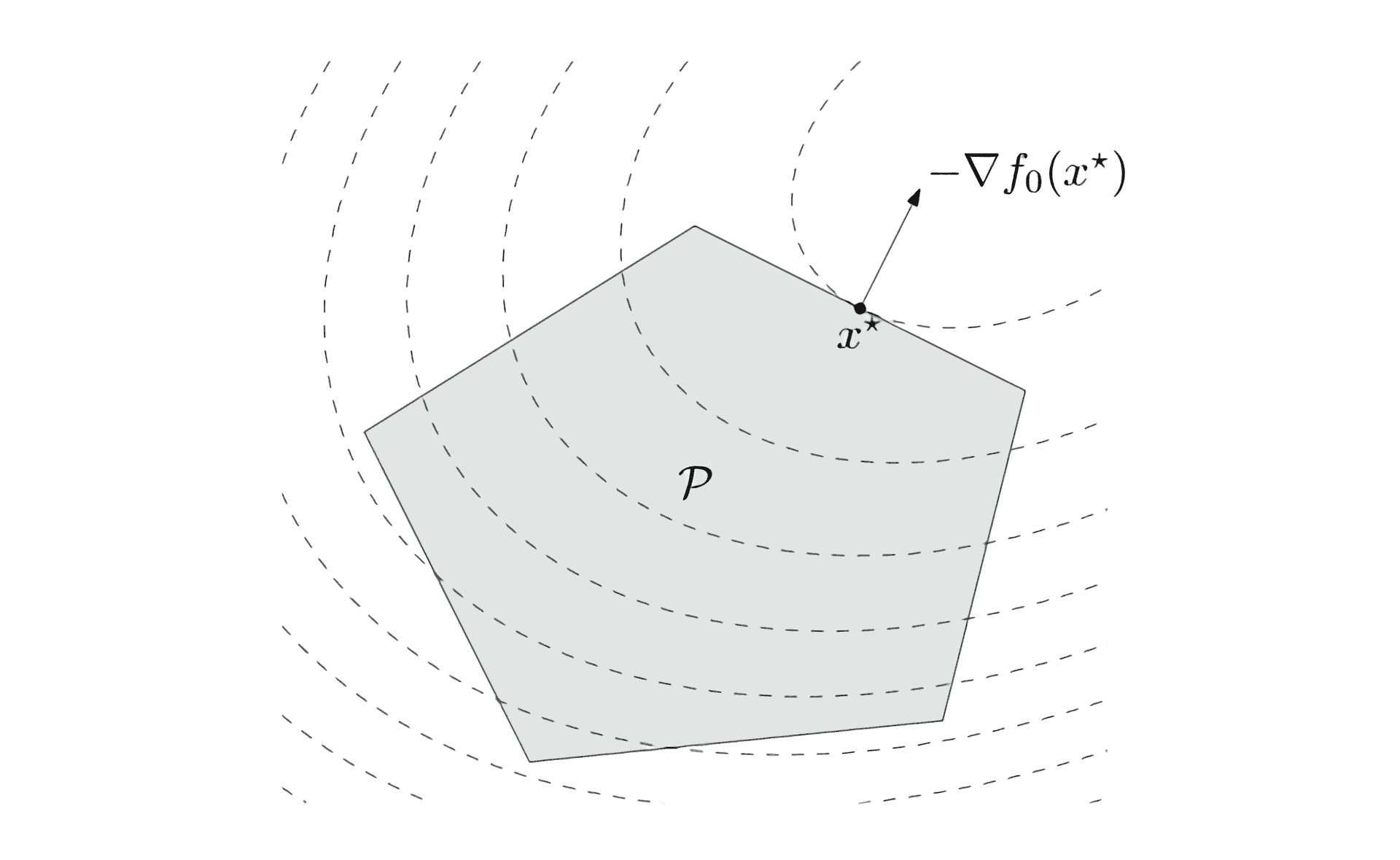

We also have a set of constraints in the form of linear inequalities, that delimit the possible solution to a convex subset of your input subspace:

, where and

These constraints are called Karush-Kuhn-Tucker.

Solution in case of strict equality

In case Karush-Kuhn-Tucker conditions are strict equalities, not inequalities, the solution becomes fairly straightforward.

We shall just apply Lagrange multiplier method and find the conditional minimum of our system on variables and , where are Lagrange multipliers.

Just add equations to and take the partial derivatives on , equating them to zero:

and also we have the equations themselves.

Thus, solution takes form of a system of linear equations with variables ( and ):

or, in short notation:

Thus, solution is straightforward.

References

- https://www.math.uh.edu/~rohop/fall_06/Chapter3.pdf - great paper on square programming techniques

- http://www.apmath.spbu.ru/cnsa/pdf/monograf/Numerical_Optimization2006.pdf - a magnum opus on numerical optimization by Wright and Nocedal

- http://www.ccgalberta.com/ccgresources/report13/2011-113_comparing_optimization_methods.pdf - on sequential quadratic programming compared to gradient descent and interior point method

- http://www.mathnet.ru/links/b3b151bdcecb5b2554cc126c89a4373e/zvmmf5189.pdf - soviet paper on solution of quadratic programming

- https://ru.wikipedia.org/wiki/%D0%9A%D0%B2%D0%B0%D0%B4%D1%80%D0%B0%D1%82%D0%B8%D1%87%D0%BD%D0%BE%D0%B5_%D0%BF%D1%80%D0%BE%D0%B3%D1%80%D0%B0%D0%BC%D0%BC%D0%B8%D1%80%D0%BE%D0%B2%D0%B0%D0%BD%D0%B8%D0%B5 - Russian wikipedia

- https://en.wikipedia.org/wiki/Taylor%27s_theorem - Taylor’s series derivation in univariate and multivariate cases

Written by Boris Burkov who lives in Moscow, Russia, loves to take part in development of cutting-edge technologies, reflects on how the world works and admires the giants of the past. You can follow me in Telegram