Lasso regression implementation analysis

February 15, 2022 12 min read

Lasso regression algorithm implementation is not as trivial as it might seem. In this post I investigate the exact algorithm, implemented in Scikit-learn, as well as its later improvements.

The computational implementation of Lasso regression/ElasticNet regression algorithms in Scikit-learn package is done via coordinate descent method. As I had to re-implement a similar L1 regularization method for a different problem of large dimensionality, I decided to study L1 regularization implementation from Scikit-learn in detail.

Scikit-learn Lasso regression implementation

Consider the problem we aim to solve. We need to minimize the weighted sum of 2 terms: first term is the sum of squares of regression residues, second term is L1 norm of regression weights with some hyperparameter :

Luckily, function is tractable, so it is easy to perform exact calculations of its gradient, hessian etc.

Thus, we don’t have to rely on the savvy techniques from numeric optimization theory, such as line search, Wolfe conditions etc.

Scikit-learn implementation of Lasso/ElasticNet uses a simple iterative strategy to find the optimum of this function. It iteratively does coordinate-wise descent. I.e. at each step of the algorithm it considers each of the coordinates one by one and minimizes relative to the coorindate . At the end of each step it checks, whether the largest update among the regression weights was larger than a certain tolerance parameter. If not, it finally checks the duality gap between the solution of primal and dual Lagrange problems for Lasso (more on the dual problem later), and if the gap is small enough, returns the weights and stops successfully. If dual gap has not converged, although regression weights almost stopped decreasing, it emits a warning.

Coordinate descent

At each step of our loop we will optimize each of the regression weights individually.

In order to do that, we will be calculating the partial derivative of the optimized function by each individual weight:

To find the new optimal value of weight we will be looking for a point, where our function takes the minimum value, i.e. its partial derivative on equals 0:

To write this result in a compact vector/matrix form, denote the vector of regression residues ,

where is the k-th column of matrix .

Then we can re-write in vector form:

.

Subgradient

Now, we should focus on the derivative of L1 regularization term: .

For it is trivial: .

However, it is undefined for , and we cannot ignore this case, as the whole purpose of L1 regularization is to keep most of our regression weights equal to 0.

The workaround from this situation is to replace the exact gradient with subgradient, which is a function less than or equal to the gradient in every point.

Now, the allowed values of the subgradient are bounded by the derivatives at and :

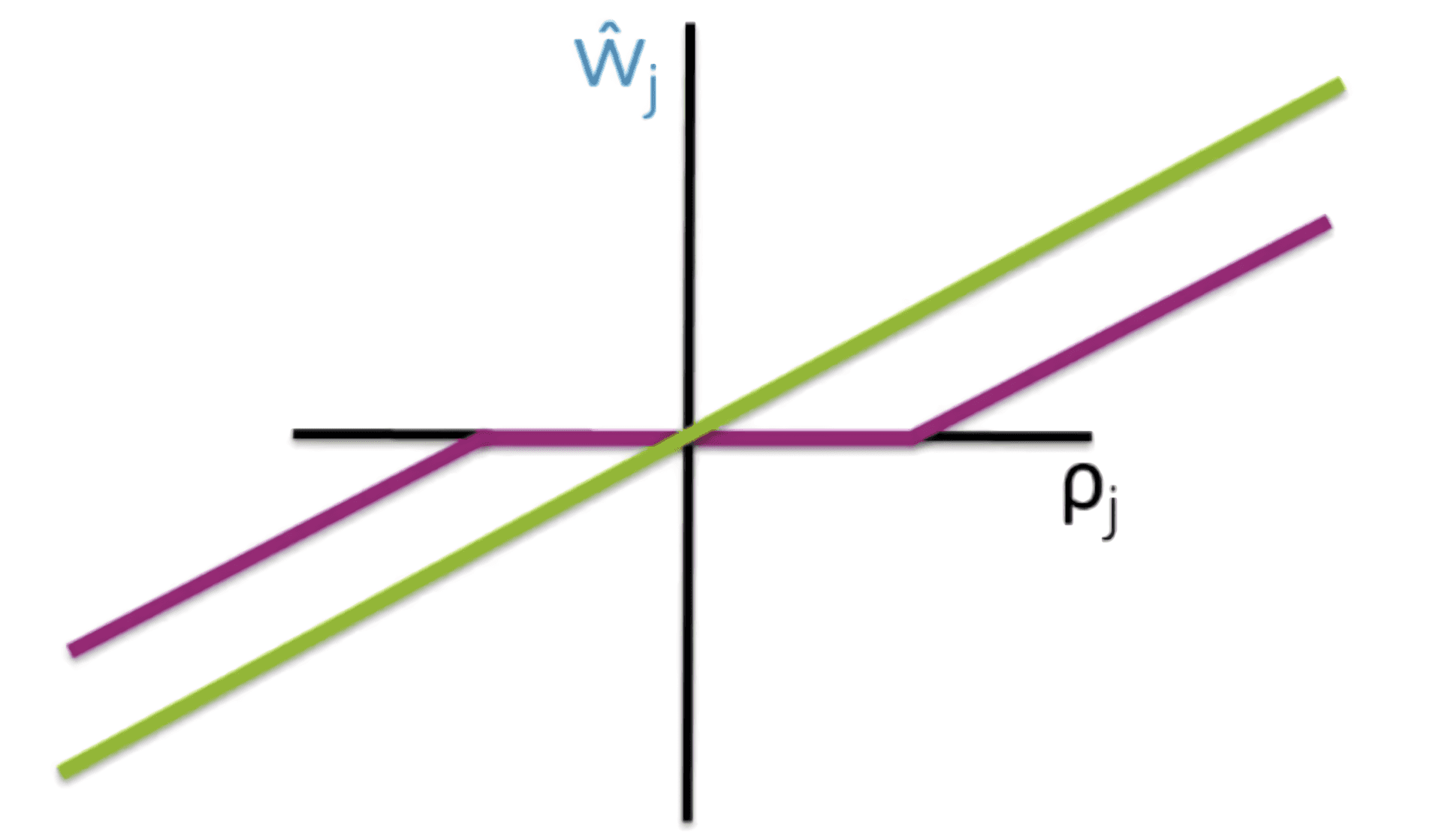

Hence, substituting the subgradient from the formula above, we get:

Now, if we substitute the exact gradient in coordinate descent formula with subgradient, we get:

Stoppage criterion: Lasso dual problem and duality gap

Now, the stoppage criterion for the Lasso procedure is formulated in terms of pair of primal-dual optimisation problems.

While is considered a parameter of the system, we introduce a variable substitution .

We present this variable substitution as a set of constraints for the optimisation problem and apply Lagrange multipliers to it:

Lagrange dual problem is to find

First, split our Lagrangian into 2 terms that we optimize independently:

Term 1 analysis

Let us take the partial derivatives of term 1 in :

, and

Substituting this back into term 1, we get:

Term 2 analysis

Let us take the partial derivatives of term 2 in :

(you can get term to be negative, and it will outgrow if )

Hence, if any of terms , , otherwise, .

Taking the terms together and solving the Lagrange dual

The optimal value of Lagrangian, corresponding to the solution of this problem is:

So, we’ve come to a dual optimization problem: we need to achieve the maximum of dual function:

,

given a set of inequality constraints (or, in an infinity-norm notation ).

Optionally, we can subtract to the function, as it does not affect the minimization, as this form is more convenient for geometric interpretation. This results in:

, subject to

The problem is convex and by Slater’s condition if the solution of primal problem is feasible, the duality is strict.

Hence, duality gap converges to 0, and we can use it as a stoppage criterion in our Lasso problem.

Geometric interpretation of Lagrange dual

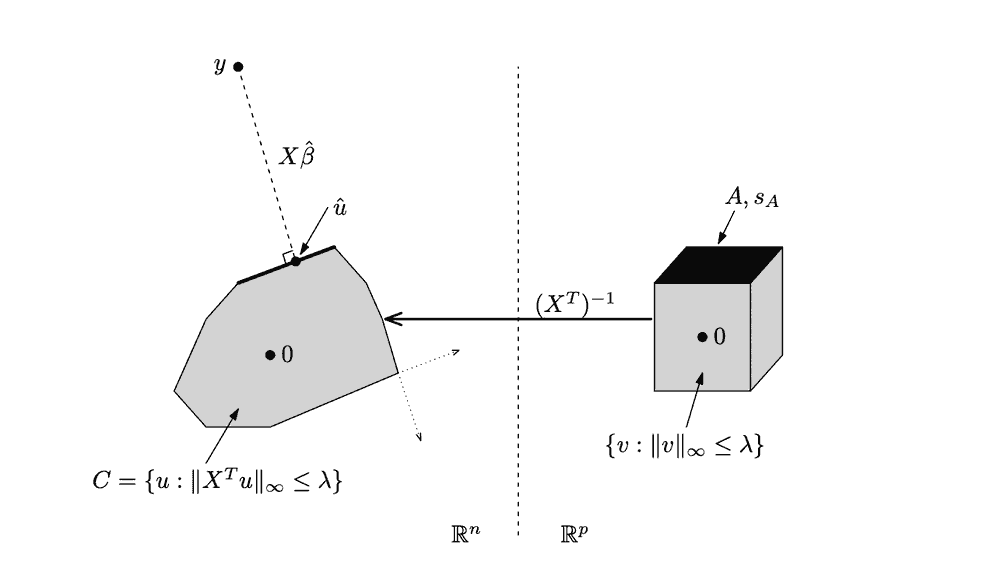

The last form of Lasso dual , subject to allows for a direct, beautiful and intuitive geometric interpretation, provided by Ryan Tibshirani (tutorial page 11, lecture 18 slides).

Left-hand side: if we were to multiply those dual vectors by inverse of matrix, we go back into the primal space . In primal space the cube of valid dual vectors () is transformed into a convex polytope, where the image of each vector () is denoted . By solving the Lasso regression problem we find such a direction that projection of the vector onto this polytope along direction is a specific vector , such that the length of the normal to this projection is minimal.

Alternatives: preconditioned conjugate gradients, quadratic programming, safe rules

TODO

References

- https://webdoc.agsci.colostate.edu/koontz/arec-econ535/papers/Tibshirani%20(JRSS-B%201996).pdf - the original paper by Robert Tibshirani

- https://hastie.su.domains/Papers/glmnet.pdf - GLMnet implementation paper by Friedman, Hastie and Tibs

- https://web.stanford.edu/~boyd/papers/pdf/l1_ls.pdf - Kim, Gorinevsky paper on faster solution PCG, dual problem etc.

- https://hal.archives-ouvertes.fr/hal-01833398/document - Celer, an alternative recent Lasso solver

- https://stephentu.github.io/blog/convex-optimization/lasso/2016/08/20/dual-lasso-program.html - lasso dual derivation by Stephen Tu

- https://www.coursera.org/lecture/ml-regression/deriving-the-lasso-coordinate-descent-update-6OLyn - a great lecture on exact solution of Lasso regression with coordinate descent by Emily Fox/Carlos Guestrin

- https://davidrosenberg.github.io/mlcourse/Archive/2019/Lectures/03c.subgradient-descent-lasso.pdf - a good presentation on subgradient descent by David Rosenberg

- https://davidrosenberg.github.io/mlcourse/Notes/convex-optimization.pdf - extreme abridgement of Boyd-Vanderberghe’s Convex Optimization

- https://xavierbourretsicotte.github.io/lasso_derivation.html - excellent blog post on Lasso derivation

- https://machinelearningcompass.com/machine_learning_math/subgradient_descent/ - a great post by Boris Giba on subgradient descent

- https://en.wikipedia.org/wiki/Slater%27s_condition - Slater’s condition

- https://www.eecis.udel.edu/~xwu/class/ELEG667/Lecture5.pdf - amazing lecture with graphical explanation of strong/weak duality

- https://www.stat.cmu.edu/~ryantibs/statml/lectures/sparsity.pdf - Ryan Tibshirani paper/tutorial with nice geometrical interpretation of Lasso dual

- https://www.cs.cmu.edu/~ggordon/10725-F12/slides/18-dual-uses.pdf - same material by Geoff Gordon & Ryan Tibshirani, but as a presentation with updates on safe rules

- https://www.ias.ac.in/article/fulltext/reso/023/04/0439-0464 - intro to Lasso by Niharika Gauraha

- https://sites.stat.washington.edu/courses/stat527/s13/readings/osborneetal00.pdf - on Lasso and its dual by Osborne, Presnell and Turlach

- http://www.aei.tuke.sk/papers/2012/3/02_Buša.pdf - paper on solving quadratic programming problem with L1 norm by variable substitution by Jan Busa, alternative approach to convex optimization

- http://proceedings.mlr.press/v37/fercoq15.pdf - paper on improing safe rules for duality gap (improvement on the seminal 2012 el Ghaoui work on safe rules - fast screening techniques)

- https://people.eecs.berkeley.edu/~elghaoui/Teaching/EE227A/lecture8.pdf - el Ghaoui lecture on Lasso

Written by Boris Burkov who lives in Moscow, Russia, loves to take part in development of cutting-edge technologies, reflects on how the world works and admires the giants of the past. You can follow me in Telegram